Understanding the brain’s visual system could inform the development of better artificial systems

For most of us, vision is a major part of how we perceive the world, as it provides a constant stream of information about the objects that surround us. Yet there’s a lot scientists still don’t know about how our brains gather and integrate this information into the cohesive images that we see.

Carlos Ponce, who recently became an assistant professor of neurobiology in the Blavatnik Institute at Harvard Medical School, is among those who are captivated by these questions about the visual system. He’s motivated by the idea that if researchers can understand how the visual system in the brain works, they can use this information to build better computational models.

“Imagine a computational model that can see and reason as well as a human does, but faster and 24 hours a day,” Ponce said.

Such models could power artificial visual systems with myriad applications, from medical imaging to driving to security.

Ponce spoke with Harvard Medicine News about his research, which combines computational models with electrophysiology experiments to explore the underpinnings of the visual system.

HM News: How did you become interested in visual neuroscience?

Ponce: I grew up on a farm, and I always liked animals, so I knew that biology was going to be my path. My interest in visual neuroscience was inspired by the work of neuroscientists who focus on outreach and making their research accessible to nonexperts.

Specifically, during college, I read this amazing article in Scientific American about how the visual system isn’t a fully connected, coherent system that matches up with our perception, but rather is separated into subnetworks that specialize in tasks such as recognizing shapes or tracking movements. I was fascinated by the idea that my perception, which is unified into this beautiful movie of vision, is being deconstructed by a part of my brain that I can’t access consciously and is a product of these smaller, computational demons that are doing their own processing. It made me think of myself not just as a unified essence but as a computational process. It was astounding to me. I thought that if I didn’t work full-time to understand how the brain worked, I would always wonder.

HM News: How are you studying the visual system in the brain?

Ponce: I study the parts of the visual system that are concerned with the analysis of shapes—so all the operations that allow us to recognize a face versus a hat, for example. These parts of the brain are called the ventral pathway, and they comprise neurons known to respond to complex images like pictures. I use macaque monkeys as a model, because of all experimental animals they have the brain that is the closest to that of humans.

If you have a human or a monkey look at a screen and you present pictures, some of the pictures will make the neurons fire a lot more—and often, those pictures are things that we can interpret, like faces or places. The responses of these neurons approximate what our perception is, but they don’t always match up exactly. It’s more complicated than that.

HM News: What does computation contribute to this endeavor?

Ponce: If we want to understand what these neurons do, we can go through images and find the ones that most strongly activate them. In the classical approach, scientists show a monkey pictures of very simple shapes, and see how its neurons respond. The pictures represent a hypothesis, and the neural response is an evaluation of that. This approach is integral to visual neuroscience, and has given us a lot of good understanding of the brain, but it is limited by our own imaginations and hunches and biases. Sometimes we don’t know what we don’t know. Moreover, this approach doesn’t allow us to predict how neurons will respond to random pictures of the world. If we want to build a visual system in a computer that is as good as the one in our brain, it needs to act across all kinds of images.

In the past five years or so, the machine learning community has developed computational models that can learn from millions of pictures of the world. The models learn motifs and shapes that can not only be used to reconstruct existing images, but can be used to create entirely new images. We use these amazing computational models in our macaque studies.

Our approach is exciting because we mostly stand back and let the cooperation between neurons and machine intelligence produce results that we weren’t expecting. Every time we study a neuron somewhere in the brain, that neuron transmits its information directly to us via machine learning. We’re no longer limited by our own imaginations and our own language in trying to understand the visual system. It’s now telling us the important features in the world that we should be paying attention to.

HM News: In your 2019 Cell paper, you figured out how to integrate these kinds of computational models into your research. What did you reveal about the visual system?

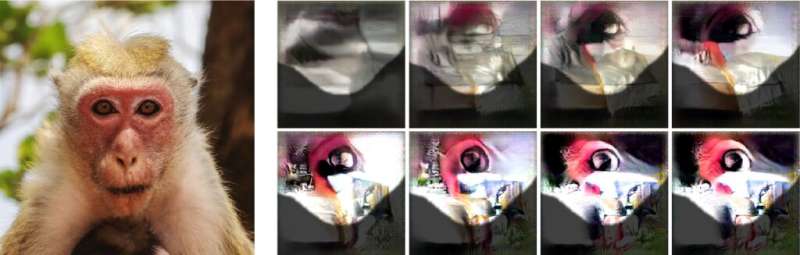

Ponce: When I was a postdoc at HMS working with Margaret Livingstone, we linked the models to neurons in the macaque visual system so that we could see pictures created from scratch that make the neurons respond more and more.

The first time we tried this, we recorded from a set of neurons in a part of the macaque brain that responds to faces. Sure enough, starting from noise, an image began to grow in the computational model that looked like a feature of a face. Not a whole face, just an eye and a surrounding curve. The neuron was going crazy firing, essentially saying, wow, this is a perfect match for what I’m encoding. Our discovery was that you can couple computational models to neurons in the macaque brain that are visually responsive, and have the neurons guide the model to create pictures that activate them best.

However, we were puzzled by some of the pictures that were created. Some made a lot of sense, like parts of faces or bodies, but others didn’t look like any one object. Instead, they were patterns that cut across semantic categories—they sometimes happen in faces, and they sometimes happen in bodies or random scenes. We realized that the neurons in the macaque brain are learning specific motifs that don’t necessarily fit our language. The neurons have a language of their own that is about describing the statistics of the natural world.
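For readers who think in code, the closed loop Ponce describes (a model proposes images, a neuron's response scores them, and the best-scoring images seed the next round) can be sketched as a small evolutionary search. This is a toy illustration under loud assumptions, not the method from the Cell paper. The names `simulated_neuron`, `toy_generator`, and `evolve` are hypothetical. The "neuron" is a made-up function with a hidden preferred pattern, and the "generator" only clamps numbers, where a real experiment would use recorded firing rates and a trained image model. The evolutionary strategy is simply one easy way to search, chosen here for illustration.

```python
import random


def simulated_neuron(image, preferred):
    """Toy stand-in for a recorded neuron (assumption): the response rises
    toward 1.0 as the image approaches the neuron's hidden preferred pattern."""
    dist_sq = sum((a - b) ** 2 for a, b in zip(image, preferred))
    return 1.0 / (1.0 + dist_sq)


def toy_generator(code):
    """Toy stand-in for a generative image model (assumption): maps a latent
    code to 'pixel' values by clamping each entry to the range [-1, 1]."""
    return [max(-1.0, min(1.0, c)) for c in code]


def evolve(preferred, dim=16, pop_size=20, keep=5, generations=60,
           sigma=0.1, seed=0):
    """Run the closed loop and return the best response seen in each generation."""
    rng = random.Random(seed)

    def score(code):
        return simulated_neuron(toy_generator(code), preferred)

    # Start from noise: random latent codes with no built-in structure.
    population = [[rng.gauss(0.0, 0.3) for _ in range(dim)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # "Show" every candidate image to the neuron and rank by response.
        ranked = sorted(population, key=score, reverse=True)
        parents = ranked[:keep]
        history.append(score(parents[0]))
        # Keep the top codes unchanged, then fill the rest of the next
        # generation with randomly perturbed copies of them.
        population = [list(p) for p in parents]
        while len(population) < pop_size:
            parent = rng.choice(parents)
            population.append([c + rng.gauss(0.0, sigma) for c in parent])
    return history


if __name__ == "__main__":
    hidden_rng = random.Random(1)
    hidden_pattern = [hidden_rng.uniform(-1.0, 1.0) for _ in range(16)]
    responses = evolve(hidden_pattern)
    print(f"first generation best response: {responses[0]:.3f}")
    print(f"last generation best response:  {responses[-1]:.3f}")
```

Because the top-scoring codes are carried forward unchanged, the best response in this sketch can never drop from one generation to the next. That loosely mirrors the description of an image that "began to grow" from noise as the neuron responded more and more.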

HM News: You recently published a sequel to this research in Nature Communications. How did it build on your earlier work?

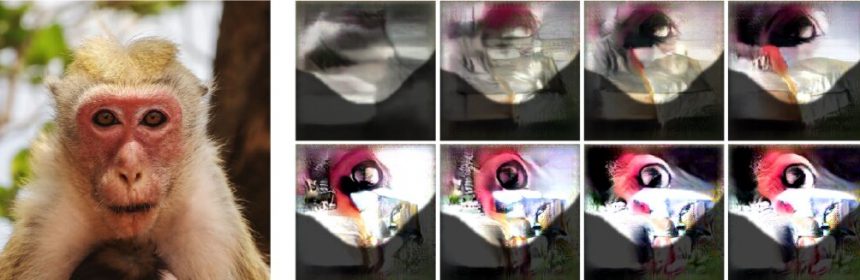

Ponce: In our new paper in Nature Communications we applied this computational method to different parts of the macaque brain that are concerned with visual recognition of shapes. This included neurons in the posterior parts of the brain that respond to very simple objects and neurons in the anterior parts that respond to more complex objects. We were able to quantify the complexity of the information that these neurons encode and found that it has an intermediate level of density; it’s not as simple as a line image and not as complex as a photograph.

Then we wondered where the images are coming from. We know that macaques, like all social creatures, including humans, look at a lot of faces. It turned out that a lot of the fragments of information we gathered from the neurons had features similar to those in faces. We thought maybe the information in the monkeys’ brains is related to where they look, and they learn important patterns of the visual world through experience. We did experiments where we let the macaques look at thousands of pictures, and compared the parts of the pictures that drew their attention to the information on synthetic shapes we got directly from their brains. Sure enough, the macaques tended to look at parts of the pictures that were similar to the features encoded by their neurons. That gives us a clue that during development, the brain extracts important patterns from the world and stores those patterns in neurons.

HM News: What do you want to do next?

Ponce: There are so many questions that we still want to answer. We now know that we can identify features in the world that activate individual neurons. However, the brain doesn’t work one neuron at a time. It works with ensembles of neurons that all respond to visual information at the same time. We want to extend our approach to characterize full populations of neurons. We want to know whether, if somebody gives us a pattern of activity across neurons, we can figure out which features of the visual world it represents. We’re exploring whether we can use our method to reconstruct images that the macaque has seen.

Another important point is that the brain organizes neurons based on function. For example, neurons that respond to faces tend to be clustered together and are further away from neurons that respond to natural scenes and places. So how does the brain decide where to put neurons? We don’t yet have that map, but I think our approach will be very good at trying to identify the topography.

Ultimately, we are trying to characterize the patterns that the brain learns and identify the neural networks that contain this information. Once we do this, we should be able to develop computational models that encode the same information and can be used to improve artificial visual systems.

I am particularly intrigued by the potential clinical applications. During my medical training I saw automated systems analyzing cervical tissue samples, and I realized that it would make so much sense to have an artificial visual system that can make sure the pathologist doesn’t miss anything. Hopefully, better artificial visual systems could be used in clinical settings to improve screening and save lives.

HM News: You were initially inspired by scientists who do outreach. Are you incorporating outreach into your new position?

Ponce: Absolutely. I hope to replicate my own introduction to science. When I emigrated to the United States from Mexico, I understood very little about academia. During high school somebody told me that you can get a job as a lab technician, and one summer I did. It was an amazing revelation to me of what science could be like.
