Hearing is believing: Sounds can alter our visual perception

Perception generally feels effortless. If you hear a bird chirping and look out the window, it hardly feels like your brain has done anything at all when you recognize that chirping critter on your windowsill as a bird.

But research published in Psychological Science suggests that audio cues like these not only help us recognize objects more quickly but can even alter our visual perception. That is, pair birdsong with a bird and we see a bird, but replace that birdsong with a squirrel’s chatter and we’re not quite so sure what we’re looking at.

“Your brain spends a significant amount of energy to process the sensory information in the world and to give you that feeling of a full and seamless perception,” said lead author Jamal R. Williams (University of California, San Diego) in an interview. “One way that it does this is by making inferences about what sorts of information should be expected.”

Although these “informed guesses” can help us to process information more quickly, they can also lead us astray when what we’re hearing doesn’t match up with what we expect to see, said Williams, who conducted this research with Yuri A. Markov, Natalia A. Tiurina (École Polytechnique Fédérale de Lausanne), and Viola S. Störmer (University of California, San Diego and Dartmouth College).

“Even when people are confident in their perception, sounds reliably altered them away from the true visual features that were shown,” Williams said.

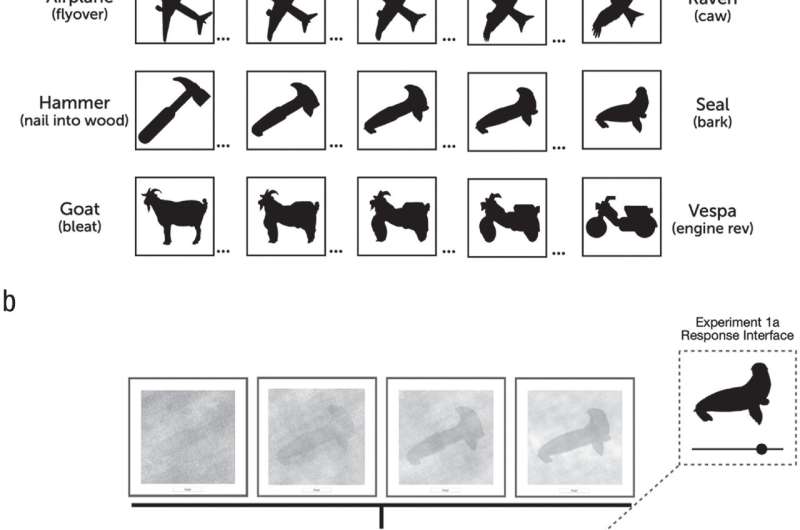

In their first experiment, Williams and colleagues presented 40 participants with figures that depicted two objects at various stages of morphing into one another, such as a bird turning into a plane. During this visual discrimination phase, the researchers also played one of two types of sounds: a related sound (in the bird/plane example, a birdsong or the buzz of a plane) or an unrelated sound like that of a hammer hitting a nail.

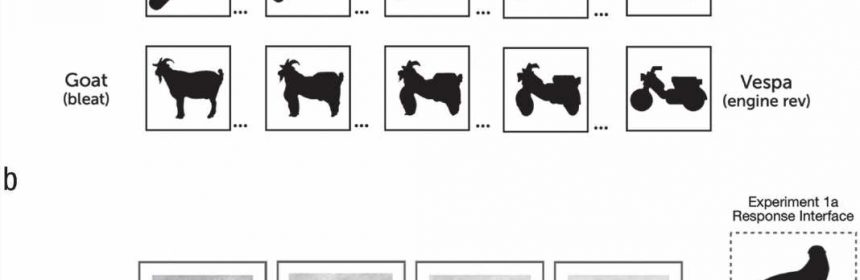

Participants were then asked to recall which stage of the object morph they had been shown. To report what they recalled, they used a sliding scale that, in the above example, made the object appear more bird-like or more plane-like. Participants made their selections more quickly when they heard related (versus unrelated) sounds, and their selections shifted toward the object that matched the related sound they heard.

“When sounds are related to pertinent visual features, those visual features are prioritized and processed more quickly compared to when sounds are unrelated to the visual features. So, if you heard the sound of a birdsong, anything bird-like is given prioritized access to visual perception,” Williams explained. “We found that this prioritization is not purely facilitatory and that your perception of the visual object is actually more bird-like than if you had heard the sound of an airplane flying overhead.”

In their second experiment, Williams and colleagues explored whether this effect arises during the visual-discrimination phase of perceptual processing or whether sounds instead shape visual perception by influencing our decision-making processes. To find out, the researchers presented 105 participants with the same task, but this time they played the sounds either while the object morph was on screen or while participants were making their object-morph selection after the original image had disappeared.

As in the first experiment, sounds influenced participants’ speed and accuracy when they were played while participants viewed the object morph, but they had no effect when they were played while participants reported which object morph they had seen.

Finally, in a third experiment with 40 participants, Williams and colleagues played the sounds before the object morphs appeared, to test whether audio input influences visual perception by priming people to pay more attention to certain objects. Playing the sounds in advance also had no effect on participants’ object-morph selections.

Taken together, these findings suggest that sounds alter visual perception only when audio and visual input occur at the same time, the researchers concluded.

“This process of recognizing objects in the world feels effortless and fast, but in reality it’s a very computationally intensive process,” Williams said. “To alleviate some of this burden, your brain is going to evaluate information from other senses.”

Williams and colleagues would like to build on these findings by exploring how sounds may influence our ability to locate objects, how visual input may influence our perception of sounds, and whether audiovisual integration is an innate or learned process.

More information:

Jamal R. Williams et al., What You See Is What You Hear: Sounds Alter the Contents of Visual Perception, Psychological Science (2022). DOI: 10.1177/09567976221121348

Journal information:

Psychological Science
